TF-IDF vs. BERT

Text feature extraction is the process of converting raw text data into a numerical or structured format that can be used as input for machine learning algorithms, statistical analysis, and various other data-driven tasks. Text data, in its natural form, is challenging for many algorithms to process directly because they typically require numerical input. Feature extraction transforms text data into a more suitable representation while retaining meaningful information.

Common techniques and methods used for text feature extraction

- Bag-of-Words (BoW): This approach represents a document as a vector of word counts. It ignores the order of words and focuses only on their frequency. Each word becomes a feature, and the count of each word in a document becomes the value for that feature.

- TF-IDF (Term Frequency-Inverse Document Frequency): This is a variation of the BoW approach. It assigns a weight to each term based on its frequency in the document and its rarity across the entire corpus. Terms that are frequent in a specific document but rare in the entire corpus are given higher weights.

- Word Embeddings: These are dense, continuous-valued representations of words that capture semantic relationships between words. Techniques like Word2Vec, GloVe, and fastText generate word embeddings, and these embeddings can be used as features for downstream tasks.

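- Sentence Embeddings: Similar to word embeddings, sentence embeddings capture the meaning of entire sentences or paragraphs. Transformer models such as BERT and GPT produce a contextual representation for each token, and these can be pooled (for example, by averaging them) into a single sentence vector. Models trained specifically for this purpose, such as Sentence-BERT, typically yield more useful sentence embeddings. These embeddings can be used for tasks like sentiment analysis, text classification, and more.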

- Topic Modeling: Techniques like Latent Dirichlet Allocation (LDA) help extract topics from a collection of documents. Each document is then represented as a probability distribution over topics, and these distributions can be used as features.
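To make the Bag-of-Words idea concrete, here is a minimal sketch using only the Python standard library. It assumes naive tokenization (lowercasing and splitting on whitespace), and the function name `bag_of_words` is hypothetical; real pipelines usually strip punctuation and often rely on a library vectorizer such as scikit-learn's `CountVectorizer`.

```python
from collections import Counter


def bag_of_words(documents: list[str]) -> tuple[list[str], list[list[int]]]:
    """Return (vocabulary, matrix) where matrix[i][j] counts vocabulary[j] in documents[i].

    Assumption: naive tokenization (lowercase + whitespace split), no stop-word removal.
    """
    tokenized = [doc.lower().split() for doc in documents]
    # Vocabulary: every distinct token, sorted so the column order is deterministic.
    vocabulary = sorted({token for tokens in tokenized for token in tokens})
    matrix = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # One column per vocabulary word; the order of words in the document is discarded.
        matrix.append([counts[word] for word in vocabulary])
    return vocabulary, matrix


if __name__ == "__main__":
    docs = ["the cat sat on the mat", "the dog sat"]
    vocab, counts = bag_of_words(docs)
    print(vocab)
    for row in counts:
        print(row)
```

For the two sample documents the vocabulary is `cat, dog, mat, on, sat, the`, and each row only records how often each of those words occurs; the original sentence order is lost.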

TF-IDF

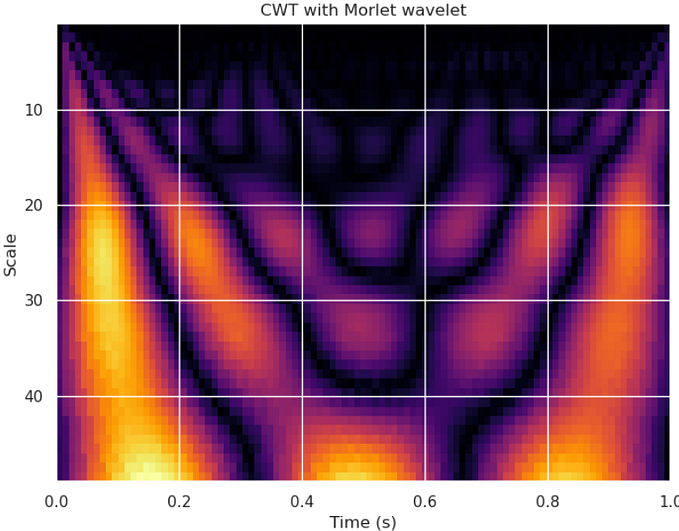

A TF-IDF matrix is a numerical representation of a collection of texts (documents) in a format that reflects the importance of each word within the documents in relation to the entire corpus. TF-IDF stands for Term Frequency-Inverse Document Frequency.

Here’s a breakdown of the terms and the process:

- Term Frequency (TF): This measures how often a term (word) appears in a document. It’s calculated as the number of times a term appears in a document divided by the total number of terms in that document. It gives more weight to words that occur more often within a specific document.

$$\text{TF} = \frac{\text{number of occurrences of the term in the document}}{\text{total number of terms in the document}}$$

- Inverse Document Frequency (IDF): This measures how unique or rare a term is across the entire collection of documents. It’s calculated as the logarithm of the total number of documents divided by the number of documents containing the term. It helps in giving more importance to words that are rare across the entire corpus.

$$\text{IDF} = \log\left(\frac{\text{total number of documents}}{\text{number of documents containing the term}}\right)$$

- TF-IDF Score: The TF-IDF score for a term in a document is the product of its TF and IDF values.

$$\text{TF-IDF} = \text{TF} \times \text{IDF}$$

By calculating the TF-IDF scores for each term in each document of a collection, you can create a matrix where rows represent documents and columns represent terms. Each cell in the matrix contains the TF-IDF score of a term within a specific document. This matrix is called the TF-IDF matrix and is a way to represent textual data in a numerical format that captures the relative importance of words within documents.
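The TF, IDF, and TF-IDF formulas can be turned into a TF-IDF matrix with a few lines of plain Python. This is a sketch under stated assumptions, not a reference implementation: it uses whitespace tokenization, the natural logarithm for IDF, and no smoothing or normalization, and the name `tfidf_matrix` is hypothetical. Libraries such as scikit-learn's `TfidfVectorizer` use a smoothed IDF and L2-normalize each row by default, so their numbers will differ.

```python
import math
from collections import Counter


def tfidf_matrix(documents: list[str]) -> tuple[list[str], list[list[float]]]:
    """Return (vocabulary, matrix) where matrix[i][j] is the TF-IDF score
    of vocabulary[j] in documents[i].

    Assumptions: lowercase + whitespace tokenization, natural log for IDF,
    no IDF smoothing, no row normalization.
    """
    tokenized = [doc.lower().split() for doc in documents]
    vocabulary = sorted({term for tokens in tokenized for term in tokens})
    n_docs = len(tokenized)

    # Document frequency: number of documents that contain each term at least once.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    # IDF = log(total number of documents / number of documents containing the term)
    idf = {term: math.log(n_docs / doc_freq[term]) for term in vocabulary}

    matrix = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total_terms = len(tokens) or 1  # guard against division by zero for empty documents
        # TF = occurrences / total terms in the document; TF-IDF = TF * IDF
        matrix.append([(counts[term] / total_terms) * idf[term] for term in vocabulary])
    return vocabulary, matrix


if __name__ == "__main__":
    docs = ["the cat sat on the mat", "the dog sat"]
    vocab, scores = tfidf_matrix(docs)
    print(vocab)
    for row in scores:
        print([round(score, 3) for score in row])
```

In this sample corpus, "sat" and "the" occur in both documents, so their IDF is log(2/2) = 0 and their columns are zero everywhere; only the terms that distinguish one document from the other receive non-zero weights.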

Conclusion

In conclusion, TF-IDF (Term Frequency-Inverse Document Frequency) is a technique used for text feature extraction and representation in natural language processing (NLP). It quantifies the importance of words within a document relative to their occurrence in the entire corpus. TF measures the frequency of a word in a specific document, while IDF measures the rarity of the word across the entire collection. The TF-IDF score for a term in a document is the product of its TF and IDF values.

TF-IDF is used to transform textual data into numerical values that serve as input for NLP tasks such as text classification, clustering, and information retrieval. By assigning higher scores to terms that are frequent in a document but rare in the corpus, TF-IDF highlights the words that are most distinctive for each document. It does not, however, model word order or context: a term receives the same weight in a document regardless of how it is used.
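As an example of the information-retrieval use case, the sketch below ranks documents against a query by the cosine similarity of their TF-IDF vectors. It is self-contained and uses the same simplified TF-IDF formulas (whitespace tokenization, natural log, no smoothing); all function names are hypothetical, and query terms that never appear in the corpus are simply ignored.

```python
import math
from collections import Counter


def build_idf(tokenized_docs: list[list[str]]) -> dict[str, float]:
    # IDF = log(total documents / documents containing the term), no smoothing
    n_docs = len(tokenized_docs)
    doc_freq = Counter(term for tokens in tokenized_docs for term in set(tokens))
    return {term: math.log(n_docs / df) for term, df in doc_freq.items()}


def tfidf_vector(tokens: list[str], idf: dict[str, float]) -> dict[str, float]:
    # Sparse vector keyed by term; terms unknown to the corpus are dropped.
    counts = Counter(tokens)
    total_terms = len(tokens) or 1
    return {term: (c / total_terms) * idf[term] for term, c in counts.items() if term in idf}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction, so treat it as "no similarity"
    return dot / (norm_a * norm_b)


def rank_documents(query: str, documents: list[str]) -> list[tuple[int, float]]:
    """Return (document index, cosine score) pairs, best match first."""
    tokenized = [doc.lower().split() for doc in documents]
    idf = build_idf(tokenized)
    doc_vectors = [tfidf_vector(tokens, idf) for tokens in tokenized]
    query_vector = tfidf_vector(query.lower().split(), idf)
    scores = [cosine(query_vector, vec) for vec in doc_vectors]
    # sorted() is stable, so documents with equal scores keep their original order
    order = sorted(range(len(documents)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in order]


if __name__ == "__main__":
    corpus = [
        "the cat sat on the mat",
        "the dog chased the cat",
        "stock prices fell sharply today",
    ]
    for index, score in rank_documents("cat on a mat", corpus):
        print(f"{score:.3f}  {corpus[index]}")
```

The first document ranks highest because it shares the rarer terms "on" and "mat" with the query, the second matches only "cat" (which appears in two of the three documents), and the third shares no terms and scores 0.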

BERT (Bidirectional Encoder Representations from Transformers), by contrast, is a pre-trained contextual language model that represents each word using both its left and right context. The model's parameters are learned during pre-training; BERT embeddings are the contextualized token representations that this pre-trained model produces for a given input, so the same word (for example, "bank" in "river bank" and in "bank account") receives different vectors in different sentences. When it was released in 2018, BERT set new state-of-the-art results on a wide range of NLP benchmarks, including GLUE and SQuAD, and pre-trained contextual language models of this kind have since reshaped natural language processing.

Both TF-IDF and BERT transform raw text into numerical representations suitable for analysis, machine learning, and downstream applications, but they make different trade-offs. TF-IDF produces sparse, interpretable vectors that are cheap to compute and work well for keyword-driven tasks such as search, while BERT produces dense, contextual representations that capture meaning more fully at a much higher computational cost.