Data Processing in Natural Language Processing (NLP)

Natural Language Processing (NLP) is a subfield of Artificial Intelligence (AI) and computer science that focuses on enabling computers to process, understand, and generate human language. NLP techniques are used in a wide range of applications, from voice assistants like Siri and Alexa to chatbots, sentiment analysis, language translation, and more.

NLP involves a variety of techniques and methodologies, including machine learning, deep learning, and rule-based approaches. These techniques are used to analyze and transform text data into a format that can be easily understood and processed by computers. The goal of NLP is to enable computers to interact with human language in a way that is natural and intuitive, allowing for more seamless communication and interaction between humans and machines.

NLP has made significant progress in recent years, due in part to the availability of large amounts of text data and advances in machine learning algorithms. As a result, NLP is becoming increasingly important in fields like healthcare, finance, and marketing, where it is being used to analyze and extract insights from large volumes of text data.

Data processing in Natural Language Processing (NLP) involves transforming raw text into a format that computers can analyze. This process typically includes several steps, which may vary depending on the specific task and the nature of the data:

- Text Preprocessing: This step involves cleaning the text data to remove any unwanted characters, symbols, or numbers. This can include tasks like removing punctuation, converting all text to lowercase, and removing stop words (common words like “and” or “the” that do not carry much meaning).

The following Python example performs text preprocessing with the NLTK (Natural Language Toolkit) library. It applies several of the techniques mentioned above: converting the text to lowercase, removing punctuation, and removing stop words. The resulting tokens can then be used for further analysis with other NLP techniques.

```python
import string

import nltk
from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

# Download the tokenizer models and the stop word list (only needed once)
nltk.download('punkt')
nltk.download('punkt_tab')  # newer NLTK releases load the tokenizer from this package
nltk.download('stopwords')

# Define the raw text data
text = "The quick brown fox jumps over the lazy dog! What a beautiful day."

# Convert text to lowercase
text = text.lower()

# Remove punctuation
text = text.translate(str.maketrans("", "", string.punctuation))

# Tokenize the text
tokens = word_tokenize(text)

# Remove stop words
stop_words = set(stopwords.words('english'))
filtered_tokens = [token for token in tokens if token not in stop_words]

print(filtered_tokens)
```

- Tokenization: In this step, the text is divided into individual units, or tokens, which are typically words or phrases. This can be done with simple techniques, such as splitting the text on whitespace, or with more advanced methods like natural language parsers.

- Part-of-speech (POS) Tagging: Once the text has been tokenized, each token is tagged with its part of speech, such as noun, verb, or adjective. This is an important step in many NLP tasks, as it helps to identify the grammatical structure of the text.

- Named Entity Recognition (NER): In this step, the text is scanned to identify named entities, such as people, places, and organizations. This can be done using machine learning models that have been trained to recognize specific types of entities.

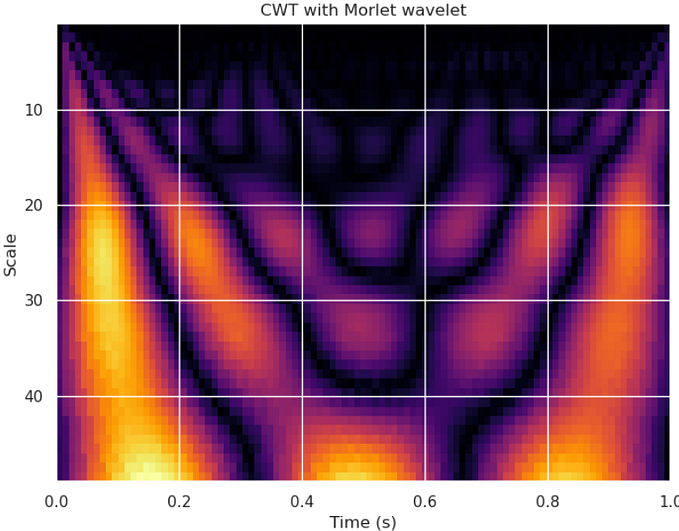
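To make the tokenization step described above more concrete, here is a minimal sketch that compares two simple strategies using only the Python standard library. The regular expression is an illustrative choice, not the rule used by NLTK or any other library, and real tokenizers handle many more cases (contractions, URLs, hyphenated words).

```python
import re

text = "The quick brown fox jumps over the lazy dog! What a beautiful day."

# Naive approach: split on whitespace. Punctuation stays attached to words.
whitespace_tokens = text.split()

# Regex approach: each run of word characters becomes a token, and each
# punctuation mark becomes its own token.
regex_tokens = re.findall(r"\w+|[^\w\s]", text)

print(whitespace_tokens)
print(regex_tokens)
```

The whitespace split keeps "dog!" as a single token, while the regex version separates "dog" and "!". Which behavior is preferable depends on whether punctuation carries information for the task at hand.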

- Feature Extraction: This step involves transforming the text data into a numerical format that can be used in machine learning models. This can include techniques like converting words to vectors using word embeddings, or using frequency-based methods like bag-of-words or term frequency-inverse document frequency (TF-IDF).

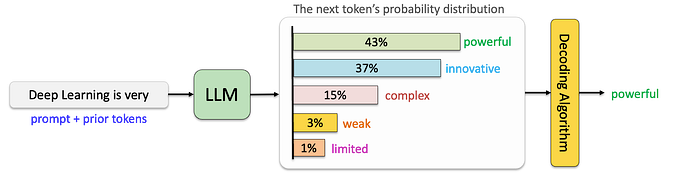
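As a rough illustration of the frequency-based methods mentioned above, the sketch below computes bag-of-words counts and a plain, unsmoothed TF-IDF score in pure Python. The three-document corpus and the helper names `bag_of_words` and `tf_idf` are hypothetical, chosen only for this example. Library implementations often use smoothed or normalized variants, so their numbers will generally differ.

```python
import math
from collections import Counter

# Hypothetical toy corpus, already lowercased and tokenized.
docs = [
    ["quick", "brown", "fox"],
    ["lazy", "brown", "dog"],
    ["quick", "quick", "dog"],
]

# Fixed vocabulary order so every document maps to a vector of equal length.
vocab = sorted({token for doc in docs for token in doc})


def bag_of_words(doc):
    """Raw count of each vocabulary word in one document."""
    counts = Counter(doc)
    return [counts[word] for word in vocab]


def tf_idf(doc):
    """Term frequency times inverse document frequency, unsmoothed."""
    counts = Counter(doc)
    vector = []
    for word in vocab:
        tf = counts[word] / len(doc)
        df = sum(1 for d in docs if word in d)  # documents containing the word
        idf = math.log(len(docs) / df)
        vector.append(tf * idf)
    return vector


print(vocab)
print(bag_of_words(docs[2]))
print([round(x, 3) for x in tf_idf(docs[2])])
```

Both functions return one number per vocabulary word, so every document becomes a vector of the same length that a machine learning model can consume. Bag-of-words records only how often a word occurs, whereas TF-IDF also gives more weight to words that appear in fewer documents of the corpus.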

- Modeling: Finally, the transformed data is used to train machine learning models that can perform specific NLP tasks, such as sentiment analysis, text classification, or language translation. The models are typically trained on a labeled dataset and optimized to achieve the best possible performance on a given task.
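The modeling step can be sketched with a very small sentiment classifier. The example below uses a multinomial Naive Bayes model with add-one smoothing, written in pure Python. Naive Bayes is only one possible choice, picked here because it fits in a few lines. The four training sentences and their labels are invented for illustration, and a real model would need far more data and a held-out test set.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled examples, invented for illustration only.
train = [
    ("what a beautiful day", "pos"),
    ("i love this quick fox", "pos"),
    ("what a terrible day", "neg"),
    ("i hate this lazy dog", "neg"),
]

word_counts = defaultdict(Counter)  # label -> word frequencies
label_counts = Counter()            # label -> number of training examples
for sentence, label in train:
    label_counts[label] += 1
    word_counts[label].update(sentence.split())

vocab = {word for counts in word_counts.values() for word in counts}


def predict(sentence):
    """Return the label with the highest log-probability under the model."""
    scores = {}
    for label in label_counts:
        total = sum(word_counts[label].values())
        # Log prior: how common the label is in the training data.
        score = math.log(label_counts[label] / len(train))
        for word in sentence.split():
            # Add-one (Laplace) smoothing avoids log(0) for unseen words.
            count = word_counts[label][word]
            score += math.log((count + 1) / (total + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


print(predict("a beautiful fox"))
print(predict("this terrible dog"))
```

Training here amounts to counting words per label, and prediction picks the label whose word counts best explain the new sentence. In practice, the counts would come from the feature extraction step, and a library model would usually replace this hand-written one.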

Conclusion

Natural Language Processing (NLP) involves a series of steps for processing and analyzing human language data, including text and speech. These steps typically include text preprocessing, feature extraction, and modeling, and can draw on techniques such as tokenization, part-of-speech tagging, and named entity recognition. Python provides a range of powerful libraries for performing NLP tasks, such as NLTK and spaCy, among others. With the growing availability of large datasets and computing resources, NLP has become an important field with a wide range of applications, including chatbots, virtual assistants, sentiment analysis, and machine translation.