Multitask learning (MTL)

Multitask learning (MTL) is a machine learning approach that involves training a model to perform multiple tasks simultaneously using the same input features. In other words, instead of training a separate model for each task, MTL trains a single model that can perform multiple tasks.

The idea behind MTL is that by sharing the representation learned from a common set of features, the model can leverage the relationships between the tasks and improve its performance on each task. This is particularly useful in situations where the tasks are related and have some common underlying structure.

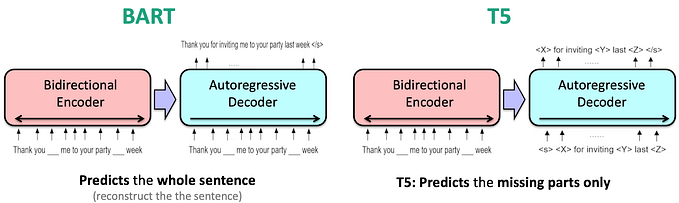

MTL can be used in various domains such as natural language processing, computer vision, and speech recognition. For example, a single model can be trained to perform tasks such as named entity recognition, sentiment analysis, and part-of-speech tagging on text data. Similarly, a single model can be trained in computer vision to perform tasks such as object recognition, segmentation, and detection.

Introduction

Multi-task learning (MTL) is a machine learning approach that involves training a model to perform multiple related tasks simultaneously using the same input features. In traditional machine learning approaches, a separate model is trained for each task, which can be computationally expensive and can lead to overfitting when only a limited amount of data is available for each task.

In MTL, a single model is trained on multiple tasks by jointly optimizing a shared objective function. The model learns to capture the common underlying patterns and relationships between the tasks by sharing the learned representation across them.

MTL has been successfully applied in various domains such as natural language processing, computer vision, speech recognition, and drug discovery. For example, in natural language processing, a single model can be trained to perform tasks such as named entity recognition, part-of-speech tagging, and sentiment analysis. In computer vision, a single model can be trained to perform tasks such as object recognition, segmentation, and detection.

When the tasks are genuinely related, the benefits of MTL can include improved performance on each task, reduced overfitting, and lower computational cost than training one model per task. However, MTL also has some challenges, such as identifying the appropriate tasks to combine and designing an appropriate shared representation that can capture the underlying relationships between the tasks.

Overall, MTL is a powerful approach to tackling complex real-world problems that involve multiple related tasks, and it has shown promising results in various applications.

Mathematical Framework

The mathematical framework of Multi-Task Learning (MTL) involves jointly optimizing the parameters of a shared model across multiple related tasks. Let’s assume that we have a set of $n$ tasks, and for each task $i$, we have a training set of $m_i$ input-output pairs denoted by $(x_{i,j}, y_{i,j})$, where $x_{i,j}$ is the input feature vector for the $j$-th instance of task $i$, and $y_{i,j}$ is the corresponding output label.

The goal of MTL is to learn a shared model $f(x)$ that can perform well on all $n$ tasks. The shared model has a set of parameters $\theta$, which are learned by optimizing a joint objective function that combines the loss functions of all the tasks. The joint objective function can be formulated as follows:

\begin{equation}
\min_{\theta} \sum_{i=1}^n \frac{1}{m_i} \sum_{j=1}^{m_i} \mathcal{L}_i(f(x_{i,j}; \theta), y_{i,j}) + \lambda R(\theta),
\end{equation}

where $\mathcal{L}_i$ is the loss function for task $i$, $R(\theta)$ is a regularization term to prevent overfitting, and $\lambda$ is a hyperparameter that controls the trade-off between the data fit and model complexity.

The loss function $\mathcal{L}_i$ can be any appropriate loss function for the corresponding task, such as mean squared error (MSE) for regression tasks, or cross-entropy loss for classification tasks. The regularization term $R(\theta)$ can be L1 or L2 regularization, or other types of regularization methods that encourage parameter sparsity or smoothness.
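
To make the joint objective concrete, here is a minimal sketch that evaluates it for a toy model. The helper names (`squared_error`, `l2_penalty`, `joint_objective`, `predict`) and the toy data are assumptions made for illustration, with L2 regularization chosen as one possible $R(\theta)$.

```python
def squared_error(prediction, target):
    """Per-example loss for a regression task."""
    return (prediction - target) ** 2


def l2_penalty(theta):
    """R(theta) chosen as the sum of squared parameters (one possible choice)."""
    return sum(w * w for w in theta)


def joint_objective(theta, tasks, predict, lam):
    """Sum over tasks of the mean per-example loss, plus lam * R(theta).

    tasks: list of (loss_fn, examples); examples is a list of (x, y) pairs.
    predict: callable implementing f(x; theta).
    """
    data_term = 0.0
    for loss_fn, examples in tasks:
        total = sum(loss_fn(predict(x, theta), y) for x, y in examples)
        data_term += total / len(examples)  # the 1/m_i factor
    return data_term + lam * l2_penalty(theta)


# Toy one-parameter model f(x; theta) = theta[0] * x (hypothetical).
def predict(x, theta):
    return theta[0] * x


tasks = [
    (squared_error, [(1.0, 2.0), (2.0, 4.0)]),  # task 1: fit exactly by theta = [2.0]
    (squared_error, [(1.0, 3.0)]),              # task 2: squared error 1.0 at theta = [2.0]
]
value = joint_objective([2.0], tasks, predict, lam=0.1)
print(value)  # 0 + 1 + 0.1 * 4, i.e. about 1.4
```

Each task's loss is averaged over its own $m_i$ examples before the tasks are summed, so a task with many examples does not automatically dominate one with few.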

The shared model can be any type of model, such as a neural network, a decision tree, or a linear regression model. The shared model can have multiple branches, where each branch is responsible for a specific task, or a single branch that learns a shared representation for all tasks.
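
The multi-branch layout can be sketched as a shared trunk followed by one small head per task. This is only an illustration under assumed choices: the single tanh layer, the linear heads, the parameter values, and the names `shared_trunk`, `task_head`, and `forward` are all hypothetical.

```python
import math


def shared_trunk(x, weights, biases):
    """Shared representation: one tanh layer used by every task."""
    return [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, biases)
    ]


def task_head(h, weights, bias):
    """Task-specific branch: a linear readout of the shared representation."""
    return sum(w * hi for w, hi in zip(weights, h)) + bias


def forward(x, params):
    """Return one prediction per task, all computed from the same trunk output."""
    h = shared_trunk(x, params["trunk"]["weights"], params["trunk"]["biases"])
    return {
        name: task_head(h, head["weights"], head["bias"])
        for name, head in params["heads"].items()
    }


# Hypothetical parameters: 3 input features, 2 shared hidden units, 2 tasks.
params = {
    "trunk": {"weights": [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1]], "biases": [0.0, 0.0]},
    "heads": {
        "task_a": {"weights": [1.0, -1.0], "bias": 0.5},
        "task_b": {"weights": [0.5, 0.5], "bias": -0.5},
    },
}
outputs = forward([1.0, 2.0, 3.0], params)
print(outputs)
```

Because both heads read the same trunk output, any update to the trunk parameters affects every task, which is where the sharing happens.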

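During training, the gradients of the joint objective function with respect to the model parameters $\theta$ are computed (using backpropagation when the shared model is a neural network), and the model parameters are updated using an optimization algorithm such as stochastic gradient descent (SGD) or Adam. This gradient-based procedure applies to differentiable models; a model such as a decision tree would need a different training method.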
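
A minimal sketch of this training loop, using plain full-batch gradient descent on a deliberately tiny model so the gradients can be written out by hand instead of relying on backpropagation. The model (a slope `w` shared by all tasks plus one intercept per task), the function name `train`, the hyperparameters, and the synthetic data are all assumptions made for illustration.

```python
def train(tasks, lam=1e-3, lr=0.05, steps=2000):
    """Gradient descent on sum_i (mean squared error of task i) + lam * ||theta||^2.

    tasks: dict mapping task name -> list of (x, y) pairs with scalar x.
    Model (an assumption of this sketch): prediction_i = w * x + b[i],
    with slope w shared by all tasks and one intercept b[i] per task.
    """
    w = 0.0
    b = {name: 0.0 for name in tasks}
    for _ in range(steps):
        # Start from the gradient of the L2 regularization term.
        grad_w = 2.0 * lam * w
        grad_b = {name: 2.0 * lam * b[name] for name in tasks}
        for name, examples in tasks.items():
            m = len(examples)
            for x, y in examples:
                residual = w * x + b[name] - y
                grad_w += 2.0 * residual * x / m    # every task contributes to the shared slope
                grad_b[name] += 2.0 * residual / m  # each intercept sees only its own task
        w -= lr * grad_w
        for name in tasks:
            b[name] -= lr * grad_b[name]
    return w, b


# Synthetic data generated from y = 2x + 1 (task_a) and y = 2x - 3 (task_b).
tasks = {
    "task_a": [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)],
    "task_b": [(0.0, -3.0), (1.0, -1.0), (2.0, 1.0)],
}
w, b = train(tasks)
print(w, b)  # w should land near 2, the intercepts near 1 and -3
```

The gradient for the shared slope accumulates contributions from both tasks, while each intercept is updated only by its own task's data. The small regularization term pulls the learned values slightly toward zero, so they will not match the generating values exactly.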

In summary, the mathematical framework of MTL involves jointly optimizing the parameters of a shared model across multiple related tasks, by minimizing a joint objective function that combines the loss functions of all tasks and a regularization term to prevent overfitting.

Dataset Example

Let’s consider a simple example of a Multi-Task Learning (MTL) dataset for predicting the prices of houses and apartments in a city. In this example, we have two related tasks:

- Task 1: Predict the price of a house based on its size (in square feet), number of bedrooms, and number of bathrooms.

- Task 2: Predict the price of an apartment based on its size (in square feet), number of bedrooms, and location (represented as a categorical variable with three possible values: “downtown”, “suburb”, and “rural”).
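
One possible way to lay out such a dataset is sketched below. The two tasks do not use exactly the same features, so this sketch assumes a common feature vector in which a feature that does not apply to a task is set to zero. The layout, the function names, and every number in the rows are made up for illustration and are not real housing data.

```python
LOCATIONS = ["downtown", "suburb", "rural"]

# Shared feature layout (an assumption of this sketch):
# [size_sqft, bedrooms, bathrooms, is_downtown, is_suburb, is_rural]


def encode_house(size_sqft, bedrooms, bathrooms):
    """Houses have no location feature here, so the one-hot slots stay at zero."""
    return [float(size_sqft), float(bedrooms), float(bathrooms), 0.0, 0.0, 0.0]


def encode_apartment(size_sqft, bedrooms, location):
    """Apartments have no bathroom count here, so that slot stays at zero."""
    if location not in LOCATIONS:
        raise ValueError(f"unknown location: {location!r}")
    one_hot = [1.0 if location == loc else 0.0 for loc in LOCATIONS]
    return [float(size_sqft), float(bedrooms), 0.0] + one_hot


# Made-up rows for illustration only; the prices are not real data.
dataset = [
    {"task": "house", "x": encode_house(2000, 3, 2), "y": 350000.0},
    {"task": "house", "x": encode_house(1500, 2, 1), "y": 250000.0},
    {"task": "apartment", "x": encode_apartment(800, 2, "downtown"), "y": 200000.0},
    {"task": "apartment", "x": encode_apartment(650, 1, "suburb"), "y": 120000.0},
]

# Group rows by task so each task's loss can be averaged over its own examples.
by_task = {}
for row in dataset:
    by_task.setdefault(row["task"], []).append((row["x"], row["y"]))

print({task: len(rows) for task, rows in by_task.items()})
```

Size and number of bedrooms appear in both tasks, which is the overlap a shared model could exploit; bathrooms and location are specific to one task each.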

Conclusion

Multi-Task Learning (MTL) is a powerful machine learning approach that involves training a single model to perform multiple related tasks simultaneously using the same set of input features. The model learns to capture the common underlying patterns and relationships between the tasks by sharing the learned representation across them. MTL has been successfully applied in various domains such as natural language processing, computer vision, speech recognition, and drug discovery.

The mathematical framework of MTL involves jointly optimizing the parameters of a shared model across multiple related tasks, by minimizing a joint objective function that combines the loss functions of all tasks and a regularization term to prevent overfitting. During training, the gradients of the joint objective function with respect to the model parameters are computed (using backpropagation when the shared model is a neural network), and the model parameters are updated using an optimization algorithm such as stochastic gradient descent (SGD) or Adam.

A typical setting for MTL is a set of related tasks in natural language processing, such as named entity recognition, part-of-speech tagging, and sentiment analysis, where a single model can be trained to perform all tasks simultaneously. Overall, MTL is a useful approach to complex real-world problems that involve multiple related tasks, and it has shown promising results in various applications.