How to Write Your Own Grid Search in a Bash Script

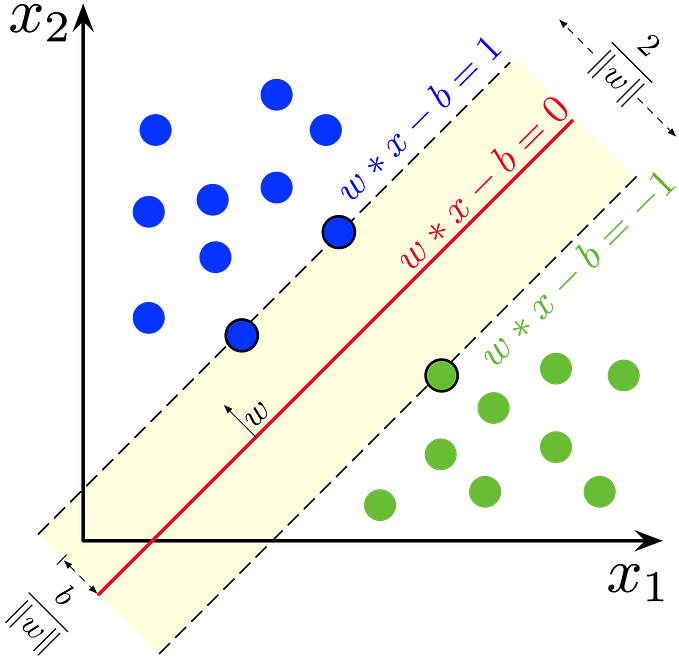

Grid Search is a model hyperparameter tuning technique in machine learning. It is used to find the best set of hyperparameters for a given model to optimize its performance on a specific task. In Grid Search, a range of values for each hyperparameter is specified and the model is trained and evaluated using each possible combination of these hyperparameters. The combination that results in the best performance is then chosen as the best set of hyperparameters for that model.
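To illustrate "each possible combination" concretely, bash brace expansion can enumerate the whole grid in one line. This is a minimal sketch. The two value lists are the illustrative values used in the script later in this article, and the `:` separator is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Brace expansion builds the Cartesian product of the two value lists:
# every value of the first hyperparameter paired with every value of the second.
grid=( {0.1,0.2,0.3}:{0.4,0.5,0.6} )

echo "${#grid[@]} combinations"
for combo in "${grid[@]}"; do
  # Split "p1:p2" back into the two hyperparameter values
  IFS=: read -r p1 p2 <<< "$combo"
  echo "p1=$p1 p2=$p2"
done
```

The first line printed is `9 combinations`. The pairs follow in order from `p1=0.1 p2=0.4` to `p1=0.3 p2=0.6`.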

Grid Search can be seen as an exhaustive search over the specified grid. It evaluates every combination, but it can only find the best values among those listed. Every added hyperparameter multiplies the number of combinations, so the method is computationally expensive and is usually used when the number of hyperparameters is relatively small. To reduce this cost, other techniques such as Randomized Search, Bayesian Optimization, or Genetic Algorithms can be used.
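To see how quickly the cost grows, you can compute the number of training runs as the product of the grid sizes. Here is a quick bash check with three hypothetical hyperparameters. The names and values are illustrative only.

```bash
#!/usr/bin/env bash
# Hypothetical grids: the names and values are illustrative only
learning_rates=(0.001 0.01 0.1)
batch_sizes=(16 32 64)
max_depths=(2 4 6 8)

# Each extra hyperparameter multiplies the number of training runs
n_runs=$(( ${#learning_rates[@]} * ${#batch_sizes[@]} * ${#max_depths[@]} ))
echo "Grid Search will train and evaluate $n_runs models"
```

This prints 36 runs (3 × 3 × 4). Adding a fourth hyperparameter with 5 values would raise the count to 180.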

Introduction

The concept of Grid Search as a hyperparameter tuning technique comes from machine learning practice. It was already widely used by the early 2000s as a simple and straightforward approach to tuning the hyperparameters of machine learning algorithms. The idea behind Grid Search is to define a grid of possible values for each hyperparameter and then train and evaluate the model for each combination of these values.

Grid Search has since become a standard technique for hyperparameter tuning in machine learning. Over the years, various refinements and alternatives have been proposed to address its limitations, such as its computational cost and the risk of overfitting to the validation data. These include Randomized Search and Bayesian Optimization.

Despite these advancements, Grid Search remains a widely used and well-established hyperparameter tuning technique, particularly for simple models and small datasets.

Advantages

- Simple and straightforward: Grid Search is easy to understand and implement, and it does not require any advanced mathematical or statistical knowledge.

- Robustness: Grid Search treats the model as a black box, so it works with a wide range of machine learning algorithms and datasets.

- Versatility: Grid Search can tune many kinds of hyperparameters, both continuous and categorical. Continuous hyperparameters are discretized into a finite list of values.

- Easy to parallelize: each combination is trained and evaluated independently, so the runs can be spread across CPU cores or machines. This helps when each training run is expensive, for example on large datasets.

- No prior knowledge required: Grid Search does not require any prior knowledge about the optimal hyperparameters, making it an attractive option for practitioners who do not have domain-specific knowledge.

- Interpretable results: Grid Search provides interpretable results in the form of the best set of hyperparameters and the corresponding performance metric, making it easy to understand why a particular set of hyperparameters was chosen.
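Because each combination is evaluated independently, the search parallelizes with standard command-line tools. Below is a minimal sketch using `xargs -P`, which both GNU and BSD `xargs` support. The function `score_combo` and its toy score are hypothetical placeholders for real training and evaluation.

```bash
#!/usr/bin/env bash
# score_combo is a hypothetical stand-in for "train, then evaluate".
# It prints "p1 p2 score", using a toy score that peaks at p1=0.2, p2=0.5.
score_combo() {
  local p1=$1 p2=$2
  awk -v a="$p1" -v b="$p2" \
    'BEGIN { printf "%s %s %.4f\n", a, b, 1 - ((a - 0.2)^2 + (b - 0.5)^2) }'
}
# Make the function visible to the bash processes started by xargs
export -f score_combo

param_1=(0.1 0.2 0.3)
param_2=(0.4 0.5 0.6)

# Emit one "p1 p2" pair per line, run up to 4 scoring jobs at once,
# then keep the line with the highest score (third field)
best=$(
  for p1 in "${param_1[@]}"; do
    for p2 in "${param_2[@]}"; do
      echo "$p1 $p2"
    done
  done |
    xargs -P 4 -n 2 bash -c 'score_combo "$1" "$2"' _ |
    sort -k3,3g | tail -n 1
)

echo "Best combination: $best"
```

Jobs may finish in any order, so the results are sorted only after all of them have completed. `-P 4` caps the number of concurrent jobs. Keep it at or below the number of cores (or GPUs) you actually have.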

Implementation

There are plenty of libraries that perform Grid Search, such as scikit-learn and TensorFlow. But what if you have written your own model and cannot use one of them, or you want a faster approach?

One approach I found interesting is to implement Grid Search in a bash script and combine it with a Python class.

Here is an example of how you can implement Grid Search in bash using a simple script:

```bash
#!/usr/bin/env bash

# Define the range of values for each hyperparameter
param_1=(0.1 0.2 0.3)
param_2=(0.4 0.5 0.6)

# One "p1 p2 score" entry per combination
results=()

# Loop through each combination of hyperparameters
for p1 in "${param_1[@]}"; do
  for p2 in "${param_2[@]}"; do
    # Train the model using the current combination of hyperparameters
    model=$(train_model "$p1" "$p2")

    # Evaluate the trained model
    score=$(evaluate_model "$model")

    # Store the results
    results+=("$p1 $p2 $score")
  done
done

# Find the best combination: sort the entries by the score (third field).
# -g compares general numeric values, so decimals such as 0.87 sort correctly.
best=$(printf '%s\n' "${results[@]}" | sort -k3,3g | tail -n 1)
read -r best_p1 best_p2 best_score <<< "$best"

echo "The best combination of hyperparameters is: $best_p1 $best_p2 (score: $best_score)"
```

This script defines a range of values for two hyperparameters, param_1 and param_2. It then loops through each combination of these hyperparameters, trains the model with the current combination, and evaluates its performance. Each result is stored in the results array as a "p1 p2 score" entry. The best combination is found by sorting these entries by the score and selecting the highest value.

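Note that the train_model and evaluate_model functions must be defined before the loop to perform the actual training and evaluation of the model. The script captures their standard output. train_model should therefore print something that identifies the trained model (for example, a file path), and evaluate_model should print a single numeric score where higher is better.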
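To try the loop without a real model, you can replace the two functions with toy stand-ins. Both functions below are hypothetical. The "model" is just a string, and the score is an arbitrary function with its maximum at (0.2, 0.5). The rest follows the grid search loop described above.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in: prints an identifier for the "trained model"
train_model() {
  echo "model_${1}_${2}"
}

# Hypothetical stand-in: prints a single numeric score (higher is better).
# It recovers the two values from the identifier and scores how close
# they are to an arbitrary optimum at (0.2, 0.5).
evaluate_model() {
  local id=${1#model_}            # strip the "model_" prefix -> "p1_p2"
  local x=${id%_*} y=${id#*_}     # split on the underscore
  awk -v a="$x" -v b="$y" 'BEGIN { printf "%.4f\n", 1 - ((a - 0.2)^2 + (b - 0.5)^2) }'
}

param_1=(0.1 0.2 0.3)
param_2=(0.4 0.5 0.6)
results=()

for p1 in "${param_1[@]}"; do
  for p2 in "${param_2[@]}"; do
    model=$(train_model "$p1" "$p2")
    score=$(evaluate_model "$model")
    results+=("$p1 $p2 $score")
  done
done

# Highest score wins: sort on the third field, keep the last line
best=$(printf '%s\n' "${results[@]}" | sort -k3,3g | tail -n 1)
read -r best_p1 best_p2 best_score <<< "$best"
echo "The best combination of hyperparameters is: $best_p1 $best_p2 (score: $best_score)"
```

With these stand-ins the script reports 0.2 and 0.5 with a score of 1.0000. To tune a real model, replace the two functions, for example with calls to your own Python training code.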

Conclusion

Grid Search is a widely used and well-established hyperparameter tuning technique in machine learning. It is a simple and straightforward approach to finding the best set of hyperparameters for a given model. Grid Search has limitations, such as its computational cost and the risk of overfitting, but it remains a valuable tool in the machine learning practitioner's toolkit. Its ease of parallelization and its interpretable results make it an attractive option for many applications. Grid Search can be easily implemented in a variety of programming languages, including bash, which makes it accessible to a wide range of practitioners.